Hollywood Filmmaking in 2030 – According to the Studios

Is the embedded video not working? Watch on YouTube: https://youtu.be/UO6e_wMAp_0

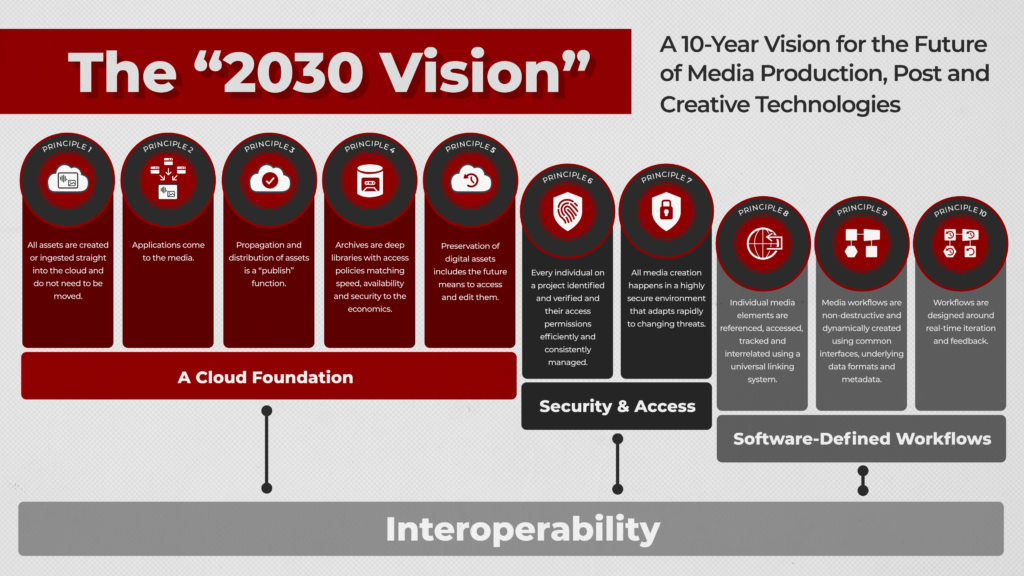

In 2019, MovieLabs, a not-for-profit group founded by the 5 major Hollywood Studios, released a whitepaper called “The 2030 Vision”. This paper detailed the 10 Principles that all of the studios agree on for ushering in the next generation of Hollywood content creation.

What are these 10 principles, why should you care, and who the heck is MovieLabs?

Let’s start there.

1. What is MovieLabs?

Paramount Pictures, Sony Pictures Entertainment, Universal Studios, Walt Disney Pictures and Television, and Warner Bros. Entertainment are part of a joint research and development effort called MovieLabs. As an independent, not-for-profit organization, MovieLabs was tasked with defining the future workflows of the member studios and developing strategies to get there.

This led to their authoring of the “2030 Vision Paper”.

2030 Vision Overview

As MovieLabs is also charged with evangelizing these 2030 goals, they’ve become the Pied Pipers for the future media workflows of the Hollywood studio system.

Now, what most folks don’t realize is that a vast majority of Hollywood is risk-averse.

Changing existing, predictable (and budgetable) workflows doesn’t happen until a strong case can be made for significant time and cost savings. Without a defined goal to work towards, studio productions would most likely continue on the path they’ve been on, iterating only incrementally.

So, defining these goals and getting buy-in from each studio meant that there would be a joint effort to realize this 2030 vision. It also gave all the companies who provide technology to the studios a broad roadmap to develop against.

Essentially, everyone sees the blinking neon sign of the 2030 Vision Paper, and everyone is making their way through the fog to get to that sign.

The 2030 Vision Paper is directly influencing the way hundreds of millions of dollars are being, and will be, spent.

So, what exactly does this manifesto say?

2. A New Cloud Foundation

The 10 core principles of the MovieLabs 2030 Vision Paper can be organized into 3 broad categories: A New Cloud Foundation, Security & Access, and Software-defined Workflows.

The first half of these 10 principles relate directly to the cloud.

Principle #1 means that any audio and video being captured goes directly to the cloud – and stays there. And it’s not just captured content; it’s also supporting files like scripts and production notes. Captured content can either be beamed directly to the cloud or saved locally and THEN immediately sent to the cloud. This media can be a mix of camera or DIT-generated proxies and high-resolution camera originals, plus any audio assets.

As of now, the overwhelming majority of productions save all content locally. Multiple copies and versions are then made from these local camera originals, and only then is the selected content moved to the cloud. The cloud is usually neither the first nor the primary repository for storage.

Having everything in the cloud is done for one big reason: if all the assets, from production to post-production, are in a place that anyone in the world with permission can access, then we no longer have islands of the same media replicated in multiple places, whether on multiple cloud storage pools or sitting on storage at some facility.

We’d save a metric [bleep]-ton of time otherwise lost copying files and waiting for files to be sent to us, plus the cost to each facility of storing everything locally.

Of course, having the media in the cloud does present some obvious challenges. How do we edit, color grade, perform high-resolution VFX, or mix audio with content sitting in the cloud?

That’s where Principle #2 comes into play.

Most local software applications for professional creatives are built on the assumption that media assets are local, either on a hard drive connected to your computer or on some kind of network-shared storage. Accessing all media from the cloud introduces higher latency and lower bandwidth that most applications just aren’t built for.

Despite this, one of the many tech questions answered by the pandemic was “What is the viability of creatively manipulating content when you don’t have the footage locally?”

The answer is that in many cases, it’s totally doable. Companies like LucidLink thrived by letting remote creatives in different locations use and share media stored in the cloud. LucidLink was the glue that enabled applications to come to the media, and not the other way around.

Now, in most cases, we still need to use proxies rather than high-res files for most software tools. Editing, iterating on, and viewing high-res material that’s sitting in the cloud is still difficult unless you have a tailored setup. Luckily, we still have 7 years before 2030, and this front is constantly advancing.

Working with everything while it’s in the cloud also means that when it’s time to release your blockbuster, you can simply point to the finished versions in the cloud – no need to upload new versions.

That’s where Principle #3 comes in.

This means no more waiting for the changes you’ve made on a local machine to export and re-upload, then for new links and metadata to be generated, along with the laundry list of other things that can go wrong during versioning. In most cases, the cloud will process media faster, as you can effectively “edit in place”.

The promise is that of time savings and less room for error.

Moving on, Principle #4 gets a bit wordy, but it’s necessary.

Archives generally house content that is saved for long-term preservation or for disaster recovery, and should rarely, if ever, be accessed. This is because archived material is typically saved on storage that is cheaper per terabyte, but with the caveat that restoring any of that archived content will take time, and thus money.

This philosophy is as true for on-premises storage as it is for cloud storage. It can simply be cost-prohibitive to have massive amounts of content on fast storage when access to it is infrequent.

So, Principle #4 is saying that not everyone uses archives the same way, and if you do need to access that content in the cloud somewhere down the road, your archive should be set up in a way that makes financial sense for the frequency of access, while also ensuring that secure user permissions are granted to those who understand this balance.
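To put rough numbers on Principle #4’s trade-off, here’s a back-of-the-envelope sketch in Python. Every price in it is a made-up placeholder, not any cloud provider’s actual rate, and the `Tier`, `annual_cost`, and `cheapest_tier` names are hypothetical. It simply adds a year of storage to the cost of every restore in that year and picks whichever tier comes out cheaper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tier:
    name: str
    storage_per_tb_month: float  # hypothetical $ per TB per month
    retrieval_per_tb: float      # hypothetical $ per TB restored


def annual_cost(tier: Tier, size_tb: float, restores_per_year: int, tb_per_restore: float) -> float:
    """Twelve months of storage plus the cost of every restore in that year."""
    storage = tier.storage_per_tb_month * size_tb * 12
    retrieval = tier.retrieval_per_tb * tb_per_restore * restores_per_year
    return storage + retrieval


def cheapest_tier(tiers: List[Tier], size_tb: float, restores_per_year: int, tb_per_restore: float) -> Tier:
    return min(tiers, key=lambda t: annual_cost(t, size_tb, restores_per_year, tb_per_restore))


# Invented prices for illustration only: fast storage with free reads vs. cheap storage with paid restores.
HOT = Tier("hot", storage_per_tb_month=20.0, retrieval_per_tb=0.0)
ARCHIVE = Tier("archive", storage_per_tb_month=1.0, retrieval_per_tb=20.0)

if __name__ == "__main__":
    # 100 TB of show assets, restoring 50 TB at a time.
    for restores in (0, 12, 60):
        best = cheapest_tier([HOT, ARCHIVE], size_tb=100, restores_per_year=restores, tb_per_restore=50)
        print(restores, best.name)
```

With these invented numbers, the archive tier stays cheaper until about 23 restores of 50 TB per year; your real break-even point depends entirely on your provider’s pricing and on how often someone needs that content back.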

Principle #4 also dovetails nicely into a major consideration with archived assets. Ya know, making more money!

Sooner or later, an executive is gonna get the great-and-never-thought-of-before idea to re-monetize archived assets. Whether it’s a sequel, reboot, or super-duper special anniversary edition, you’re going to need access to that archived content. Our next principle provisions for this.

Having content in a single location that you’re allowed to see is one thing, but how you accurately search for and then retrieve what you need is, for now, a challenging problem.

Let’s take this one step further: Years or even decades may have passed since the content was archived, and modern software may not be able to read the data properly.

Some camera RAW formats, as the paper points out, are proprietary to each camera manufacturer and need to be debayered using that manufacturer’s proprietary algorithms.

So, do we convert the files to something more universal (for now) or simply archive the software that can debayer the files?

We simply don’t have a way to 100% futureproof every bit of content.

What I’m getting at here is that the content has to be fully accessible and actionable – all in the cloud.

3. Security & Access

OK, great, stuff is in the cloud, but how do I make sure that only the right people have access to it?

Slow down and take a breath, as Security and Access is one of the 3 areas of attention in the 2030 Vision paper.

Peeling the onion layers back, this will require the industry to devise and implement a mechanism to identify and validate every person who has access to an asset on a production, which we’ll call a “Production User ID”.

This would be for all creatives, executives, and anyone else involved in the production. This “Production User ID” would allow that verified person to access or edit specific assets in the cloud for that production.

This also paves the way for permissions and rights that are based on the timing of the project.

As the 2030 Vision Paper calls out, “A colorist may not need access to VFX assets but does need final composited frames; a dubbing artist may need two weeks’ access to the final English master and the script but does not need access to the final audio stems; and so on.”

As Hollywood frequently employs freelancers – and executives change jobs – this also allows for users to have their access revoked when their time on the production is over.

In theory, tying this to a “Production User ID” means all permissions for any Production you work on are administered to your single ID. You wouldn’t have to remember even more usernames and passwords, which should make all of you support and I.T. folks watching and reading this a bit happier.
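Here’s a minimal Python sketch of how a single ID with time-boxed, per-asset-class grants might behave, using the paper’s dubbing-artist example. The 2030 Vision Paper doesn’t prescribe an implementation; `Grant`, `AccessPolicy`, the user ID, and the asset-class strings are all hypothetical. It denies by default, so anything not explicitly granted (like the final audio stems) stays locked, and revoking a person removes everything tied to their ID at once.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class Grant:
    user_id: str      # hypothetical "Production User ID"
    asset_class: str  # e.g. "final_english_master", "script"
    action: str       # "read" or "edit"
    starts: datetime
    expires: datetime


class AccessPolicy:
    """Deny-by-default permissions tied to one ID per person (illustrative only)."""

    def __init__(self) -> None:
        self._grants: List[Grant] = []

    def grant(self, g: Grant) -> None:
        self._grants.append(g)

    def revoke_user(self, user_id: str) -> None:
        # Drop every grant for this person, e.g. when their time on the production ends.
        self._grants = [g for g in self._grants if g.user_id != user_id]

    def allowed(self, user_id: str, asset_class: str, action: str, at: datetime) -> bool:
        # Access requires a matching grant whose time window covers `at`.
        return any(
            g.user_id == user_id
            and g.asset_class == asset_class
            and g.action == action
            and g.starts <= at < g.expires
            for g in self._grants
        )


# The paper's example: a dubbing artist gets two weeks with the English master and the script.
start = datetime(2030, 1, 6)
policy = AccessPolicy()
for asset in ("final_english_master", "script"):
    policy.grant(Grant("dub-artist-01", asset, "read", start, start + timedelta(weeks=2)))

print(policy.allowed("dub-artist-01", "script", "read", start + timedelta(days=3)))
print(policy.allowed("dub-artist-01", "final_audio_stems", "read", start + timedelta(days=3)))
```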

As you can imagine, accessing the content doesn’t mean it’s 100% protected while you’re using it.

This is where I hope a biometric approach to security takes off sooner rather than later, as the addition of new security layers on top of aging precautionary measures is often incredibly frustrating. Multiple verification systems also increase the chance of systems not working properly with one another, which is a headache for everyone…and your IT folk.

MovieLabs suggests “Security by design,” which means designing systems where security is a foundational component of system design, not a bolt-on after the fact. There is also the expectation that safeguards will be deployed to handle predictive threat detection, and that this will hopefully negate the need for 3rd-party security audits, which are a massive headache and time suck.

The security model also assumes that any user at any time could be compromised. Yes, this includes you, executives. You are not above the law. This philosophy is also known as “Zero Trust”, and if you had my parents, you’d totally understand the concept of “zero trust”.

The last Principle in the “Security and Access” section is #8:

If you’ve done any kind of work with proxies, or managed multiple versions of a file, then you’ve dealt with the nightmare that is relinking files. Changes in naming conventions, timecode, audio channels, and even metadata can cause your creative application du jour to throw “Media Offline” gang signs.

And this isn’t just for media files. Supporting documents like scripts, project files, or sidecar metadata files can frequently have multiple versions.

This means that in addition to needing a “Production User ID” per person, we also need a unique way to identify every single media asset on a production; and every user with permission needs to be able to relink to that asset inside the application they’re using.

That’s a pretty tall order.

The upside is that you shouldn’t have to send files to other creatives – you simply send a link to the media, or even just to the project file, which already links to the media.

The goal is also to have this universal, relinking identifier functioning across multiple cloud providers. The creative application would then work in the background with the various cloud providers to use the most optimized version of the media for where and what the creative is doing.

Oh yeah, all of this should be completely transparent to the user.
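Under the hood, a universal linking system might look something like this Python sketch: one production-wide ID points at every stored copy of an asset, and the application asks for the best copy for where the user is and what they’re doing. The ID format, provider names, regions, and URIs are invented for illustration, and a real resolver would weigh far more than region and representation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Replica:
    provider: str        # hypothetical cloud provider label
    region: str
    representation: str  # e.g. "proxy" or "camera_original"
    uri: str


class AssetRegistry:
    """Maps one production-wide asset ID to every stored copy of that asset."""

    def __init__(self) -> None:
        self._replicas: Dict[str, List[Replica]] = {}

    def register(self, asset_id: str, replica: Replica) -> None:
        self._replicas.setdefault(asset_id, []).append(replica)

    def resolve(self, asset_id: str, region: str, representation: str) -> Replica:
        candidates = [
            r for r in self._replicas.get(asset_id, []) if r.representation == representation
        ]
        if not candidates:
            raise KeyError(f"no {representation} registered for {asset_id}")
        # Prefer a copy in the caller's region; otherwise fall back to the first copy found.
        local = [r for r in candidates if r.region == region]
        return (local or candidates)[0]


registry = AssetRegistry()
shot_id = "asset:shot-042-take-3"  # hypothetical ID format
registry.register(shot_id, Replica("cloud-a", "us-west", "proxy", "a://media/shot042_t3_proxy.mov"))
registry.register(shot_id, Replica("cloud-b", "eu-central", "proxy", "b://media/shot042_t3_proxy.mov"))
registry.register(shot_id, Replica("cloud-a", "us-west", "camera_original", "a://media/shot042_t3_ocf.mxf"))

# An editor in Europe gets the nearby proxy; a colorist anywhere gets the camera original.
print(registry.resolve(shot_id, region="eu-central", representation="proxy").uri)
print(registry.resolve(shot_id, region="ap-south", representation="camera_original").uri)
```

The point of the design is that a project file only ever stores `shot_id`; which physical copy gets opened is decided at resolve time, so nobody has to relink when media moves.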

4. Software Defined Workflows

The last 2 principles are centered around using software to define our production and post-production workflows.

Principle #9 covers two huge areas. The first is a common “ontology”, that is, a unified set of terminology and metadata; the second is a common industry API.

Consider this: You’ve been hired to work on The Fast and Furious 27, and there is, unpredictably, a flashback sequence.

You need access to all media from the 26 previous movies to find clips of Vin Diesel, err Dom, sitting in a specific car at night, smiling. Have the 26 previous movies been indexed second by second, so you can search for that exact circumstance? How do you filter search results to see if he’s sitting in a car or standing next to it? How do you filter out if the car is in pristine shape or riddled with bullet holes after a high-speed shootout with a fighter jet?

As an industry, we don’t have “connected ontologies”, meaning we can’t even agree that a term used in a production sense means exactly the same thing in a CGI sense. If we can’t agree on unified terms for things, how can we label them so you can search for them while working on the obvious Oscar bait that is Fast and Furious 27?
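Here’s a toy Python sketch of what a connected ontology buys you: each department keeps its own words, but those words map to shared concepts, so one search works no matter who typed it. The vocabularies, tags, and clip IDs are all invented, and the sketch skips the genuinely hard part, which is tagging decades of footage in the first place.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical vocabularies: different departments' words for the same concept.
CANONICAL: Dict[Tuple[str, str], str] = {
    ("production", "picture car"): "vehicle",
    ("vfx", "hero car asset"): "vehicle",
    ("editorial", "car"): "vehicle",
    ("production", "night ext"): "night",
    ("editorial", "night"): "night",
}


def normalize(department: str, term: str) -> str:
    """Map a department's term to the shared concept, or keep it as-is if unmapped."""
    key = term.strip().lower()
    return CANONICAL.get((department, key), key)


@dataclass(frozen=True)
class Clip:
    clip_id: str
    concepts: FrozenSet[str]  # tags already stored as shared concepts


def search(clips: List[Clip], department: str, terms: List[str]) -> List[str]:
    """Return IDs of clips tagged with every requested concept."""
    wanted = {normalize(department, t) for t in terms}
    return [c.clip_id for c in clips if wanted <= c.concepts]


library = [
    Clip("ff01-0412", frozenset({"dom", "vehicle", "night", "smiling", "seated", "pristine"})),
    Clip("ff07-1120", frozenset({"dom", "vehicle", "night", "standing", "bullet_damage"})),
]

# A production person and a VFX artist can search with their own words.
print(search(library, "production", ["Picture Car", "Night EXT", "Dom", "smiling"]))
print(search(library, "vfx", ["hero car asset", "dom"]))
```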

The second ask is a common industry API or “Application Programming Interface” that all creative software applications use.

This allows software to be built so that it can be slotted into modular workflows. This is meant to combat compatibility issues between legacy tools and emerging workflows, and to reduce downtime due to siloed tech solutions. While each modular software solution can be specialized, there will also be a minimum set of data, metadata, and format support that other software modules in the production workflow can understand. Because of this base level of compatibility, all creative functions will also be non-destructive.

Wait, you may be thinking…how in the world is this accomplished?

All changes made during the production and post-production process will be saved as metadata, which is applied to the original camera files. This provides not only the ultimate in media fidelity but also the ability to peel back the metadata layers at any time to iterate.
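A minimal Python sketch of that idea, assuming a frame can be stood in for by a few named image parameters: each creative decision is stored as an operation, the original is never modified, and peeling back a layer just means dropping the latest operation and re-rendering. `Operation` and `EditStack` are hypothetical names, and a real system would store serializable parameters rather than Python lambdas.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for a frame: a few named image parameters.
Frame = Dict[str, float]


@dataclass(frozen=True)
class Operation:
    """One creative decision stored as metadata, not baked into pixels."""
    label: str
    apply: Callable[[Frame], Frame]


@dataclass
class EditStack:
    original: Frame  # the camera original; never mutated
    ops: List[Operation] = field(default_factory=list)

    def push(self, op: Operation) -> None:
        self.ops.append(op)

    def peel(self, count: int = 1) -> List[Operation]:
        """Remove the most recent `count` operations and return them."""
        cut = max(0, len(self.ops) - count)
        removed = self.ops[cut:]
        del self.ops[cut:]
        return removed

    def render(self) -> Frame:
        # Re-apply every stored operation, in order, to a copy of the original.
        frame = dict(self.original)
        for op in self.ops:
            frame = op.apply(frame)
        return frame


shot = EditStack(original={"exposure": 0.0, "saturation": 1.0})
shot.push(Operation("lift exposure", lambda f: {**f, "exposure": f["exposure"] + 0.5}))
shot.push(Operation("desaturate", lambda f: {**f, "saturation": f["saturation"] * 0.8}))
graded = shot.render()
removed = shot.peel()  # undo the desaturation without touching the original
print(graded, shot.render(), shot.original)
```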

We wrap up with Principle #10:

I get this; no one likes to wait.

When and if Principle #10 is realized, it means no more waiting for rendering, whether for on-set live visual effects, game engines, or even CGI in post. Processing in the cloud will negate the need to wait for renders. Feedback and iteration can be done in a significantly shorter amount of time. Creative decisions on set can be made in the moment rather than based on renders delivered weeks or months later.

The 2030 Vision Paper also suggests this would limit post-production time – as well as budget – as more of each would be shifted towards visualizations in pre-photography.

5. Are We On Track?

Yes…ish?

There are a ton of moving parts, with both business and technology partners, so MovieLabs checks the industry’s progress towards the 2030 vision annually and provides a gap analysis of the current state of the industry and the work that remains.

Without question, our industry has made advancements in areas like camera-to-cloud capture, VFX turnovers, real-time rendering, and cloud-native creative collaboration tools. This year’s Hollywood Professional Association (HPA) Tech Retreat, which has become the de facto must-attend industry conference, showcased many Hollywood projects and technology partners, like AWS, Skywalker, and Disney Marvel.

The 10 principles need a foundation to build upon, and that means getting this cloud thing handled first. And that’s just what we saw.

Principles 1, 2, and 3 were the predominant Principles that large productions attempted to tackle first, with a smattering of “Software Defined Workflow” progress.

However, these case studies also reflected where we do need to make more progress, as we are already 4 years into this 2030 vision. These gaps include:

- Interoperability gaps, where tasks, teams, and organizations still rely on manual handoffs that can lead to errors and inefficiencies.

- Custom point-to-point implementations dominate, making integrations complex.

- Open, interoperable formats and data models are lacking.

- Standard interfaces for workflow control and automation are absent.

- Metadata maintenance is inconsistent, and common metadata exchange is missing.

We also have gaps in operational support, where workflows are complex and often span multiple organizations, systems, or platforms.

- There’s a gap between understanding cloud-based IT infrastructure and understanding media workflows. Not all files are created equal, and media needs specialized expertise beyond traditional IT.

- Support models need to match the industry’s unique requirements, considering its global and round-the-clock nature.

If we take a look at Change Management, we do run into fundamental problems with a move of this scale.

- This technology is new and constantly evolving, and it’s being spearheaded only by the studios and their supporting technology partners. This means few creatives have actually tried and deployed new ‘2030 workflows.’

- Plus, managing this change involves educating stakeholders, involving creatives earlier, demonstrating the value of the 2030 vision, and measuring its benefits.

I’m sure you have some input on one or more of these 5 THINGS. Let me know in the comments section. Also, please subscribe and share this tech goodness with the rest of your techie friends.

5 THINGS is also available as a video or audio-only podcast, so search for it on your podcast platform du jour. Look for the red logo!

Until the next episode: learn more, do more.

Like early, share often, and don’t forget to subscribe.

Editing & Motion Graphics: Amy at AwkwardAnthems.
